Adobe MAX 2025 Sneaks: Inside the Research Showcase Where Innovation Meets Comedy

Adobe Sneaks brings together research innovation and live comedy as engineers demo experimental tech on stage.

Yesterday at Adobe MAX 2025, I attended a private Q&A with Gavin Miller, Adobe’s Head of Research, followed by the annual Sneaks showcase where engineers demo experimental projects that might someday become real products. It was a rare peek behind the curtain at how Adobe turns early ideas into working magic.

Adobe Sneaks is an annual tradition at MAX where researchers get on stage in front of 10,000+ people and demo experimental projects that may (or may not) eventually ship as product features.

The format: a comedian hosts, engineers present their prototypes live, and the audience reacts in real time. Think TED Talks meets stand-up comedy meets science fair. This year’s host was Jessica Williams (comedian/actress), and she was brilliant - witty, quick with the commentary, and made what could’ve been a dry tech demo feel like a show. The crowd was fully locked in and the vibes were immaculate.

The projects shown at Sneaks come from Adobe Research, but also from product teams and collaborations across the company. They go through a juried selection process where proposals (with demo videos) get reviewed by researchers, product managers, and execs. About 200 submissions get narrowed down to the handful you see on stage.

Some past Sneaks have shipped (Content-Aware Fill, for example, started as a Sneak). Others were too experimental or ahead of their time. But the point isn’t to promise features - it’s to show where Adobe’s research is headed and get immediate audience feedback on what resonates.

It’s part product roadmap preview, part research showcase, part entertainment. And it was followed by the MAX Bash concert and party outside. For someone who has been playing with Photoshop for 24 years, daydreams of being a comedian, and thinks about AI systems all day - it’s basically my idea of a perfect evening.

TL;DR: I attended a Q&A with Adobe’s Head of Research, then watched experimental AI projects demo live on stage with a comedian. Here’s what I learned about Adobe’s research process and the wild tech they’re building:

Inside the Research Process - How 200 proposals become a handful of demos

Controlling Light After the Shot - Add lighting and remove objects with AI that understands reflections

Video and Animation Get Smarter - Edit one frame, update entire videos + AI that plays chess

Sound Design and Dialogue Cleanup, Automated - Generate soundscapes and edit audio by editing text

Inside the Research Process

Before the show, I joined a small group of journalists, creators, and industry analysts for a Q&A with Gavin Miller, Adobe’s Head of Research. Here’s what most people don’t know:

About 200 proposals get submitted each year. They come from Adobe Research, product teams, and collaborative efforts across the company. Each submission includes a demo video because it’s easier to prototype things now than it used to be. Proposals are reviewed by researchers, technologists, product managers, and senior execs who each pick their top 25.

Then a smaller group narrows it down to the handful you see on stage, balancing two criteria: projects that are excellent and interesting, and a lineup that covers a range of creative domains. They don’t want everything to be imaging or everything to be video - they’re thinking about the MAX audience experience.

What struck me about this process is that by the time a project reaches the Sneaks stage, it’s been seen, evaluated, and championed by dozens of people across the company. It’s not one researcher’s pet project anymore - it’s something that multiple teams have looked at and said ‘yes, this matters.’ The excitement in the room during Sneaks isn’t just audience reaction to cool tech. It’s the culmination of months of internal momentum, collaborative refinement, and collective belief that this idea deserves the spotlight. That’s what makes the energy different from a typical product demo.

Here’s the part I love: Adobe Research doubles in size every summer with interns. And about 90% of features that eventually ship either started as or involved an intern project along the way. Not every intern project ships, but the ones that do often started in that exploratory phase. It’s a leveraged way to explore many ideas and gradually filter down to the ones worth developing.

The live demos are high stakes. You’ve got researchers - often shy, technical people - presenting experimental tech in front of 10,000 attendees, with a comedian providing real-time commentary. Gavin mentioned that even when demos crash, the audience cheers. It’s become a wonderful tradition. But what’s less visible is that product teams are literally taking notes during Sneaks on audience reactions. If something gets a strong response, it can fast-track that project’s development.

One technical detail Gavin mentioned that’s relevant to what you’ll see: they’re combining technologies like Gaussian splats (a way to represent 3D scenes as many small, semi-transparent ellipsoids in space) with generative AI models. The splat gives you real-time 3D interaction, then the AI adds photorealistic detail back in. It’s not just one breakthrough - it’s orchestrating multiple technologies together into seamless workflows.
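For the curious, here’s a rough Python sketch of what a single "splat" is. This is my own illustration, not Adobe’s code, and the names (`Splat`, `weight`, `composite`) are made up. Each splat is a 3D Gaussian with a center, per-axis scales and a rotation that together define its ellipsoid shape, plus a color and an opacity. A real renderer projects these ellipsoids onto the screen, sorts them by depth, and alpha-blends them. This sketch skips the projection and the generative refinement step entirely.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]  # row-major; column k is the splat's k-th local axis


@dataclass
class Splat:
    center: Vec3
    scales: Vec3    # ellipsoid radii along the splat's local axes
    rotation: Mat3  # orientation of the ellipsoid in world space
    color: Vec3     # RGB in [0, 1]
    opacity: float  # peak opacity at the center

    def weight(self, p: Vec3) -> float:
        """How strongly this splat contributes at point p (Gaussian falloff)."""
        d = [p[i] - self.center[i] for i in range(3)]
        m = 0.0  # squared Mahalanobis distance
        for k in range(3):
            # Component of d along local axis k, i.e. (R^T d)_k
            local_k = sum(self.rotation[i][k] * d[i] for i in range(3))
            m += (local_k / self.scales[k]) ** 2
        return self.opacity * math.exp(-0.5 * m)


def composite(samples: list[tuple[Vec3, float]]) -> Vec3:
    """Front-to-back alpha blending of (color, alpha) pairs, nearest first."""
    out = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer splats
    for color, alpha in samples:
        for c in range(3):
            out[c] += transmittance * alpha * color[c]
        transmittance *= 1.0 - alpha
    return (out[0], out[1], out[2])
```

A splat stretched along one axis fades slowly in that direction and quickly in the others, which is what lets millions of these blobs approximate surfaces of any shape.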

They’re also thinking ahead about ethics. Adobe Research is actively working on watermarking AI-generated content and building detection methods to prevent misuse. When you can change how things sound or look this dramatically, safeguards matter.

Adobe Sneaks: The 2025 Lineup

Here’s what they showcased this year at Sneaks:

Controlling Light After the Shot

Project Light Touch

Imagine professional lighting that you set up after your photoshoot. Wild, but stick with me here. Project Light Touch isn’t about adjusting exposure or adding a filter. It lets you add actual light sources to an image - like you have a personal orb of light you can drag around and place anywhere in the scene to adjust the intensity, color, and depth.

In the demo, they took a photo with a bowl in it and added light into the bowl. Then they changed the color of that light. And the shadows updated, the reflections changed, the whole lighting logic of the scene adjusted accordingly.

You can turn day into night, add dramatic lighting from specific angles, change the entire mood and focus of an image - all in post. It’s like having control over the sun and studio lights after the shoot is done.

This is the kind of thing that normally requires 3D rendering or reshoots. Now it’s just: place light orb, adjust parameters, done.

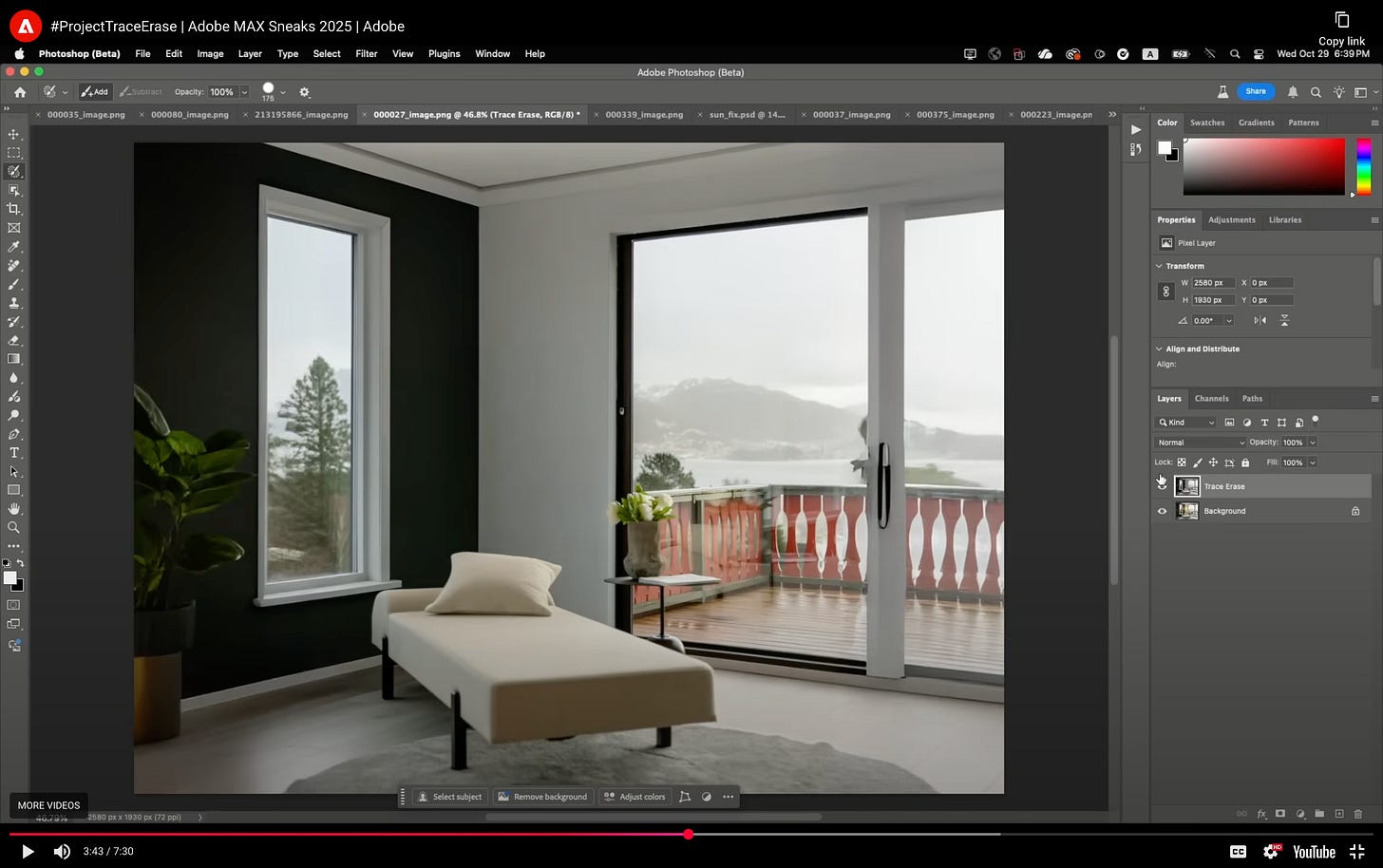

Project Trace Erase

Object removal that understands lighting and reflections, not just pixels.

In the demo, they removed a lamp from a room. Standard content-aware fill would just patch over where the lamp was standing. Project Trace Erase understood that the lamp was also casting light on the wall and creating a reflection in the window.

So when you erase the lamp, the tool automatically removes the warm glow it was creating on the surrounding surfaces AND the reflection in the glass. It’s analyzing the scene’s lighting logic and removing the cascading effects of that object, not just the object itself.

This is the difference between “fill in this empty space” and “understand what this object was doing in the scene and undo all of it.” Remove a candle, the surrounding glow disappears. Remove a mirror, the reflections it was creating are gone too.

It’s treating images like 3D environments with lighting rules, not just 2D pixel arrays.

Video and Animation Get Smarter

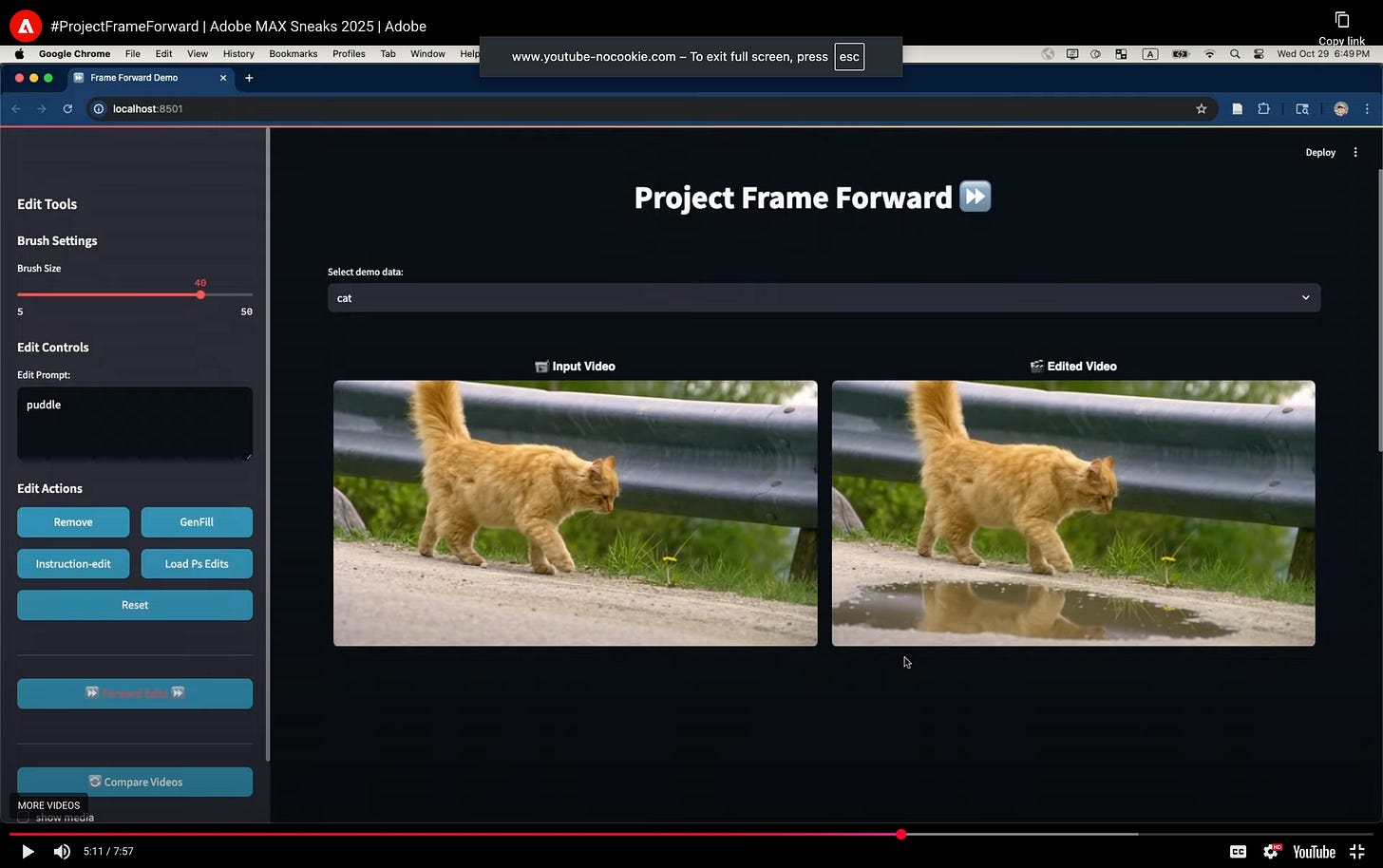

Project Frame Forward

Edit one frame of video with text prompts, and it applies those changes across the entire clip. Takes the precision you get in photo editing (where you can tweak individual elements) and brings it to video workflows.

In the demo, they had footage of a cat walking. On a single frame, they added a prompt: “puddle.”

The AI didn’t just paint a puddle on the ground. It understood that puddles reflect things. So it added the puddle AND the cat’s reflection in it - then propagated that across every frame of the video as the cat moved. The reflection updated with the cat’s position and movement throughout the entire clip.

One text prompt. One annotated frame. The whole video updates with physically accurate results.

This is bringing photo editing logic to video - where you can make targeted changes without manually tracking objects frame-by-frame or spending hours in After Effects. The kind of edit that normally takes significant time and technical skill, now happens in seconds.

Project Motion Map

Takes static vector illustrations and animates them automatically. The AI analyzes the graphic and figures out how things should move based on the composition.

During the demo, they showed a chess board graphic. The prompt wasn’t “move the knight to e4” or detailed animation instructions. It was just: “finish the game in 4 moves.”

The AI understood the rules of chess, analyzed the board state, and animated an actual chess game that reached checkmate in exactly 4 moves.

Jaw. Dropped.

This isn’t just “make things wiggle” animation. The AI understood the system it was working within, the constraints of the game, and the objective. No keyframes, no manual rigging, no explaining chess rules to the software.

Sound Design and Dialogue Cleanup, Automated

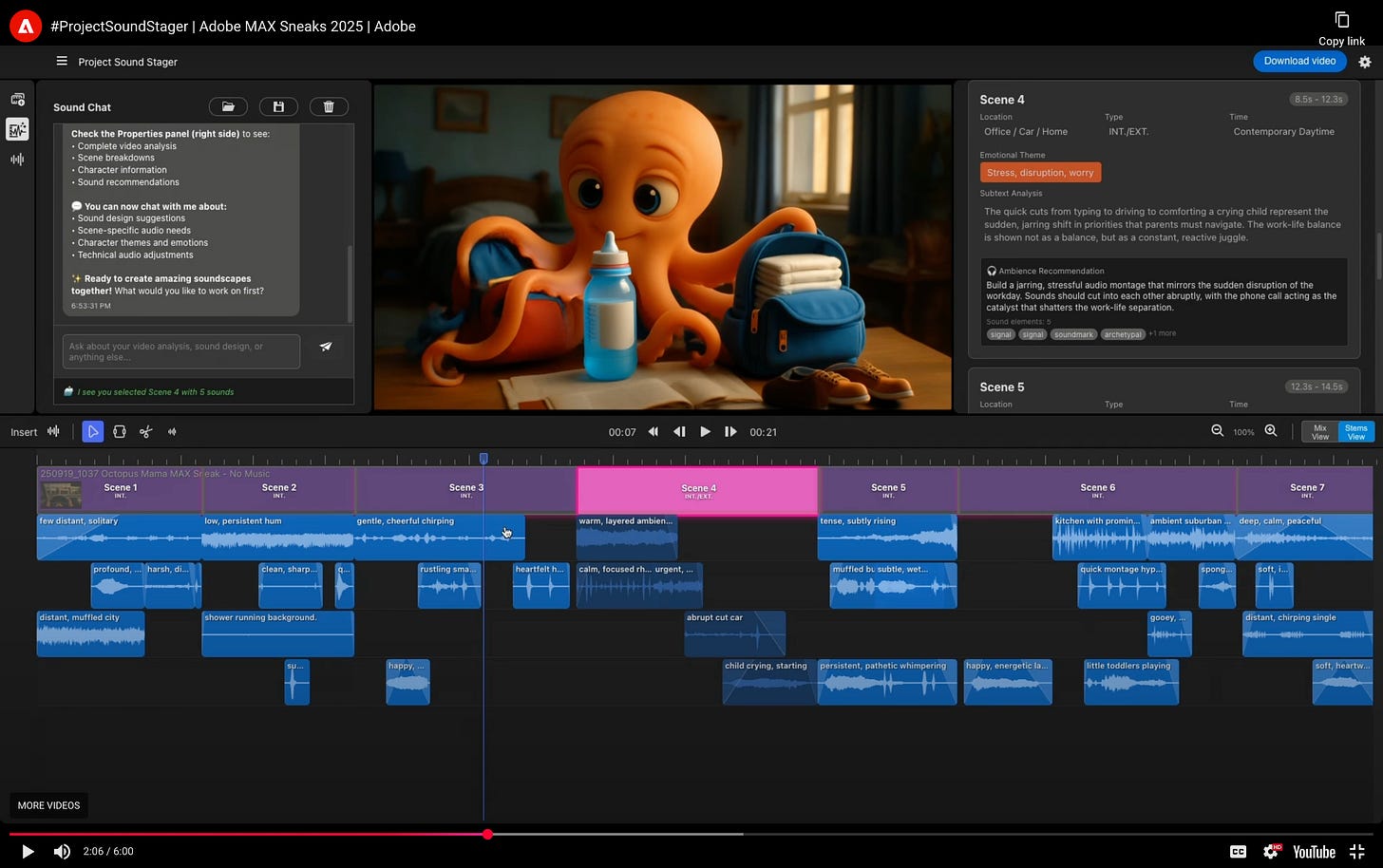

Project Sound Stager

Generates layered soundscapes by analyzing your video’s visuals, pacing, and emotional tone. Uses sound design principles to build the audio, then you work with it conversationally to adjust the mix.

In the demo, they uploaded an animated movie (with no sound). The AI analyzed the footage and automatically broke it down into scenes - identifying emotional themes like “stress, disruption, worry” for one scene and “calm, focused rhythm” for another. Then it generated sound recommendations as individual layers on a timeline.

You could see the full soundscape broken out: ambience tracks, specific sound effects (baby crying, alarm clock beeping, phone ringing), background elements (distant city, shower running). Each sound appears as its own editable layer that you can adjust, replace, or remove.

The interface lets you chat with an AI sound designer. You can say “make this scene more tense” or “the alarm is too loud” and it adjusts the mix accordingly. Or you can manually tweak any individual sound element.

This is taking the generative approach we’ve seen in image and text generation and applying it to professional sound design - giving you a starting point that understands scene context, then letting you refine from there.

Project Clean Take

You know when you’re recording a podcast or video and you don’t notice until AFTER you’re done that there was a clearly audible noise in the background the entire time? That nightmare scenario is what Project Clean Take solves.

They showed footage of someone talking on a bridge. Mid-sentence, a bridge bell started ringing in the background - loud, persistent, impossible to ignore. In Project Clean Take, the audio is displayed as layers: Speech, Ambiance, SFx, Music, Reverb. Each layer can be isolated, adjusted, or removed independently.

But the more interesting part: you can edit the transcript text, and the audio updates to match. Fix mispronunciations, change words, refine delivery - all by editing text. The AI regenerates that portion of the audio in the speaker’s voice with the corrections applied.

It’s dialogue editing that works like document editing, plus granular control over every sound element in the mix.

Check out the full demo videos for each of these projects on Adobe’s blog.

This is just the beginning. Tomorrow I’ll be breaking down everything else announced at Adobe MAX 2025: the actual product updates, my hands-on testing with Firefly and Premiere mobile, and what the multi-model approach means for creative workflows. Stay tuned.
