CES 2026: AI Left the Chat

Now it's running your operations, cutting cloud costs, and living locally on your laptop.

Stop renting intelligence. Start owning it. How the shift to local AI cuts your cloud costs and secures your data.

This year at CES, AI got physical.

Robots folded laundry and timed chores to your schedule. Cars reasoned about their surroundings before acting. Laptops ran 200-billion-parameter models with no internet. Appliances configured themselves from natural language.

The chatbot era trained us to think of AI as text in a browser window, a conversation partner that lives somewhere else and sends responses back.

You type, it responds, you copy the output into whatever you’re actually working on. The AI lives in the cloud, processes your request on hardware you’ll never see, and returns a result. That model worked for generating text and answering questions, but it had hard limits: the AI couldn’t do anything in the physical world, and it required sending your data to external servers.

CES 2026 made the shift undeniable: AI is now running on hardware you own, in environments you control.

The conversation has moved from what AI can say to what it can do, and the shift showed up across robots, cars, chips, and appliances operating in your space, not just replying in a browser tab.

Here’s what mattered most from the show floor and why it changes your costs, capabilities, and workflow.

The Drop

AI moved out of the cloud and into your physical space, running on hardware you own, not servers you rent.

New chips and architectures (NVIDIA Vera Rubin, AMD Ryzen AI 400) make agentic AI cheaper to run and on-device AI practical, cutting costs and keeping your data local.

Robots and appliances now perceive, reason, and act: from CLOiD folding laundry to TVs answering questions without interrupting your show.

The home, workspace, and devices around you are becoming adaptive systems that learn your patterns and operate on their own.

Your Cloud Bill Just Became Optional

While everyone else was playing with AI companions, I found the signal that changes how you spend on AI this year. The era of renting intelligence is over. The era of owning it just began.

The infrastructure announcements from AMD and NVIDIA shift AI processing from cloud-first to local-first. Video generation, image recognition, and 200-billion-parameter models now run on hardware you own. No per-token fees. No uploading client work to external servers. The compute layer that powers your AI tools just moved closer to your desk.

NVIDIA’s Vera Rubin is its next-generation data-center AI platform and the successor to Blackwell. It is designed to run large models faster and more efficiently than its predecessor, with more memory. Expected availability: second half of 2026.

Why this matters for your business: Vera Rubin is built for agentic AI, the kind that doesn’t just answer questions but actually does things: workflows, automations, multi-step reasoning. And it does so at a cost structure that finally makes sense for mid-sized businesses, not just enterprise giants.

This is the beginning of the end for the “token cost is too expensive” excuse.

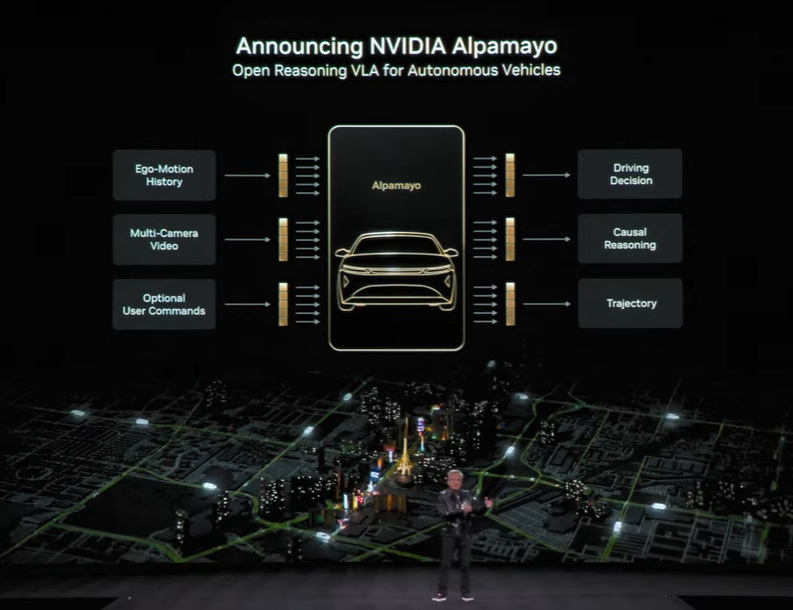

Then there’s Alpamayo, NVIDIA’s 10-billion-parameter model for autonomous driving.

The Critical Shift: This isn’t a chatbot that answers questions about cars. It is a Vision-Language-Action (VLA) model.

Chatbots (Old): Wait for a prompt -> Generate text.

Alpamayo (New): Sees the road -> Reasons about traffic -> Takes the wheel.

It doesn’t just “perceive” the environment; it decides whether to hit the brakes or change lanes. This is the move from AI that responds to AI that operates.

That’s the trajectory for physical AI broadly: systems that don’t wait for prompts but perceive their environments and act within them.
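The difference between the two patterns can be sketched in a few lines of Python. This is a toy, not how Alpamayo works: a real VLA model maps camera input to driving actions with a learned network. The `Observation` fields, the `decide` function, and the 2-second headway rule below are hypothetical stand-ins chosen only to show the shape of a perceive, reason, act loop next to a prompt-and-reply chatbot.

```python
from dataclasses import dataclass
from typing import Iterable, List, Literal

# Hypothetical action space for a toy driving agent.
Action = Literal["keep_lane", "brake", "change_lane"]


@dataclass
class Observation:
    """Hypothetical, heavily simplified perception output."""
    gap_ahead_m: float      # distance to the vehicle ahead, in meters
    speed_mps: float        # our own speed, in meters per second
    left_lane_clear: bool   # whether a lane change is possible


def chatbot(prompt: str) -> str:
    # Old pattern: idle until prompted, and the only output is text.
    return f"Here is some text about {prompt}."


def decide(obs: Observation) -> Action:
    # Toy "reasoning" step: compare time headway to a 2-second rule.
    headway_s = obs.gap_ahead_m / max(obs.speed_mps, 0.1)
    if headway_s >= 2.0:
        return "keep_lane"
    if obs.left_lane_clear:
        return "change_lane"
    return "brake"


def control_loop(stream: Iterable[Observation]) -> List[Action]:
    # New pattern: every observation yields an action, no prompt required.
    return [decide(obs) for obs in stream]


if __name__ == "__main__":
    frames = [
        Observation(gap_ahead_m=60.0, speed_mps=20.0, left_lane_clear=False),
        Observation(gap_ahead_m=20.0, speed_mps=20.0, left_lane_clear=True),
        Observation(gap_ahead_m=20.0, speed_mps=20.0, left_lane_clear=False),
    ]
    print(control_loop(frames))  # ['keep_lane', 'change_lane', 'brake']
```

The chatbot only ever returns words; the control loop turns each new view of the road into an action without anyone asking it to.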

NVIDIA also had Serve Robotics delivery bots rolling around its booth. These are the autonomous sidewalk robots you may have seen in cities like Los Angeles and Miami, handling last-mile food delivery without a driver. Serve has over 1,000 robots operating across five cities, with more than 100,000 deliveries completed. The robots are simulated in NVIDIA’s Isaac Sim platform and run on Jetson Orin edge AI hardware. Again, the pattern: AI that operates in physical space, powered by local compute.

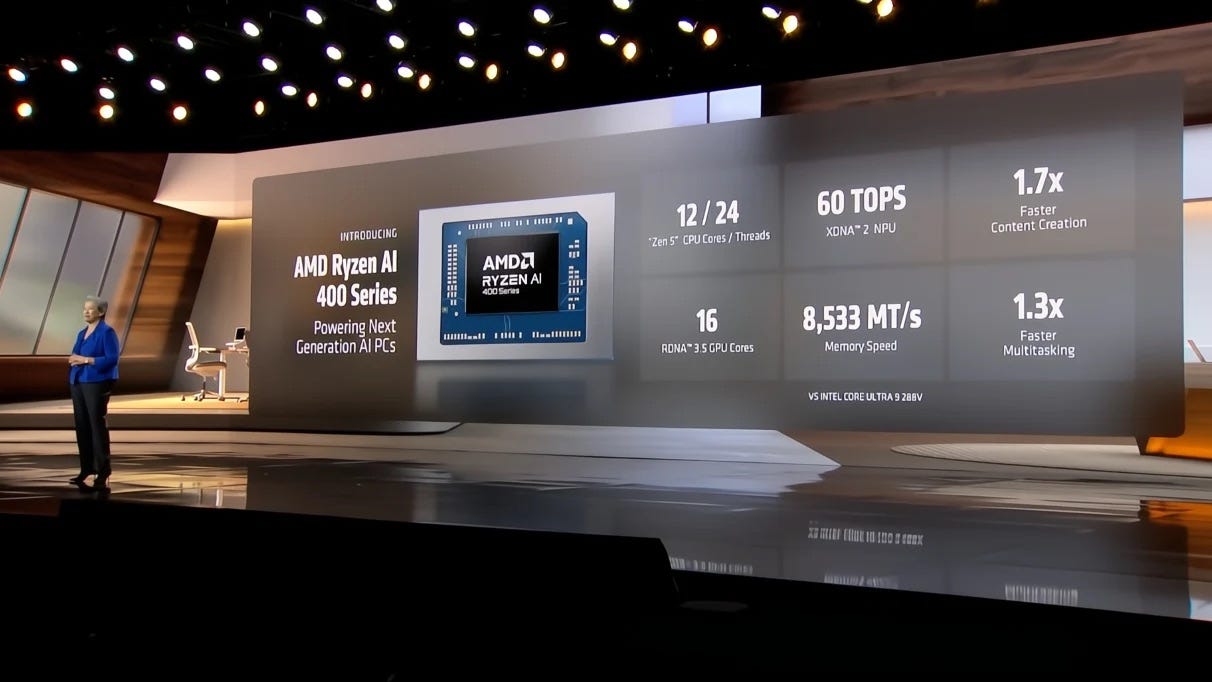

AMD showed up with the Ryzen AI 400, and for anyone handling sensitive IP, the implications are massive.

This chip moves video generation, image creation, and voice synthesis directly onto your device. That means:

Zero Fees: No per-token API costs. No monthly subscriptions.

Zero Latency: No waiting for a server farm in Virginia to render your request.

Zero Risk: You aren’t uploading client IP to someone else’s server. Private data stays private.

You finally have the option to bring critical workflows entirely in-house.

Laptops with Ryzen AI 400 are expected in early 2026.

The throughline across all of this is optionality. You can still use cloud services when they make sense. But you’re no longer locked in. Local video generation means you control the output and the cost. Local image recognition means sensitive data stays on your hardware. The infrastructure exists to choose.

If you’ve been paying monthly for cloud rendering or worried about IP security on hosted models, that math is about to change.
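To see how that math plays out, here is a rough break-even sketch in Python. Every number in it is a hypothetical placeholder, not a quoted price from AMD, NVIDIA, or any cloud provider; substitute your own API rates, subscription fees, and hardware quotes. It deliberately ignores factors like model quality differences and depreciation.

```python
def monthly_cloud_cost(tokens_per_month: float,
                       usd_per_million_tokens: float,
                       subscriptions_usd: float = 0.0) -> float:
    """Pay-as-you-go cloud spend: grows with every token you send."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens + subscriptions_usd


def breakeven_months(hardware_usd: float,
                     monthly_cloud_usd: float,
                     monthly_local_running_usd: float) -> float:
    """Months until a one-time hardware purchase beats ongoing cloud spend."""
    monthly_savings = monthly_cloud_usd - monthly_local_running_usd
    if monthly_savings <= 0:
        return float("inf")  # at this usage level, local never pays off
    return hardware_usd / monthly_savings


if __name__ == "__main__":
    # All figures are hypothetical placeholders, not real prices.
    cloud = monthly_cloud_cost(50_000_000, usd_per_million_tokens=10.0,
                               subscriptions_usd=100.0)
    months = breakeven_months(hardware_usd=2_900.0, monthly_cloud_usd=cloud,
                              monthly_local_running_usd=20.0)
    print(f"cloud: ${cloud:.2f}/month, break-even after {months:.1f} months")
```

With those placeholder inputs, cloud spend comes to $600 a month and the hardware pays for itself after five months. If your usage is light enough that the monthly savings drop to zero or below, the function returns infinity, which is the signal to stay on the cloud.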

AI Just Learned to Fold Laundry

Robots at CES are nothing new. Every year brings another humanoid prototype or warehouse automation demo. What’s different this year is that the robots are actually doing household work, and they’re designed to integrate with appliances you already own.

Enter LG’s CLOiD.

It folds laundry, retrieves food, preheats the oven, and learns your schedule.

This isn’t a set of routines; it’s pattern learning. CLOiD builds a model of your household over time and adapts to it.

CLOiD connects to LG’s ThinQ ecosystem, so the washer, dryer, fridge, and oven all work together. LG calls this the “Zero Labor Home”: robotics plus connected appliances plus physical AI. It’s not just a robot; it’s a connected system that reclaims time:

Laundry: Folded while you’re out.

Meal Prep: Ovens preheated, ingredients retrieved.

Coordination: Appliances talking to each other, not you.

The math: around 10 hours of domestic labor reclaimed weekly.

Let’s be real: 10 hours per week is a part-time job. It’s the time you could spend on your business, your creative work, your family, or just not being exhausted at the end of every day.

Pricing and availability for CLOiD have not been announced yet.

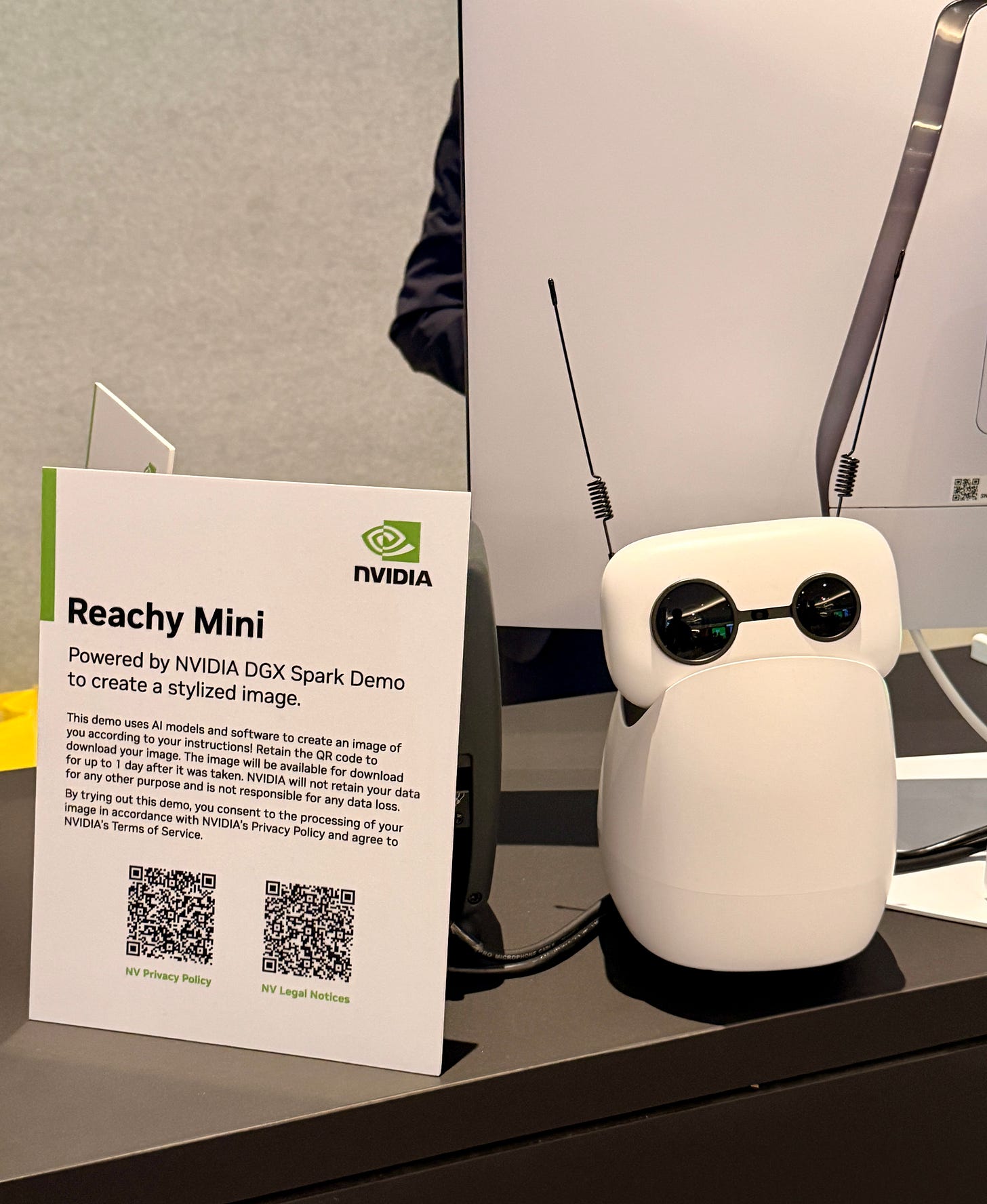

NVIDIA also showed Reachy Mini, a desktop robot you can talk to, built in collaboration with Hugging Face. It’s physical AI for your workspace. It’s early, but the direction is clear: AI that perceives, reasons, and operates in real-world environments.

NVIDIA’s push into open-source models, simulation in Isaac Sim, and Jetson Orin edge platforms tells the same story. The bet is on physical AI, and NVIDIA is handing developers the tools to build it.

Even the robot lawnmowers got smarter. Their sensors now detect obstacles (including dog poop), so they don’t drag problems across your yard. Not glamorous, but genuinely useful automation that runs without you.

Ambient Intelligence at Home

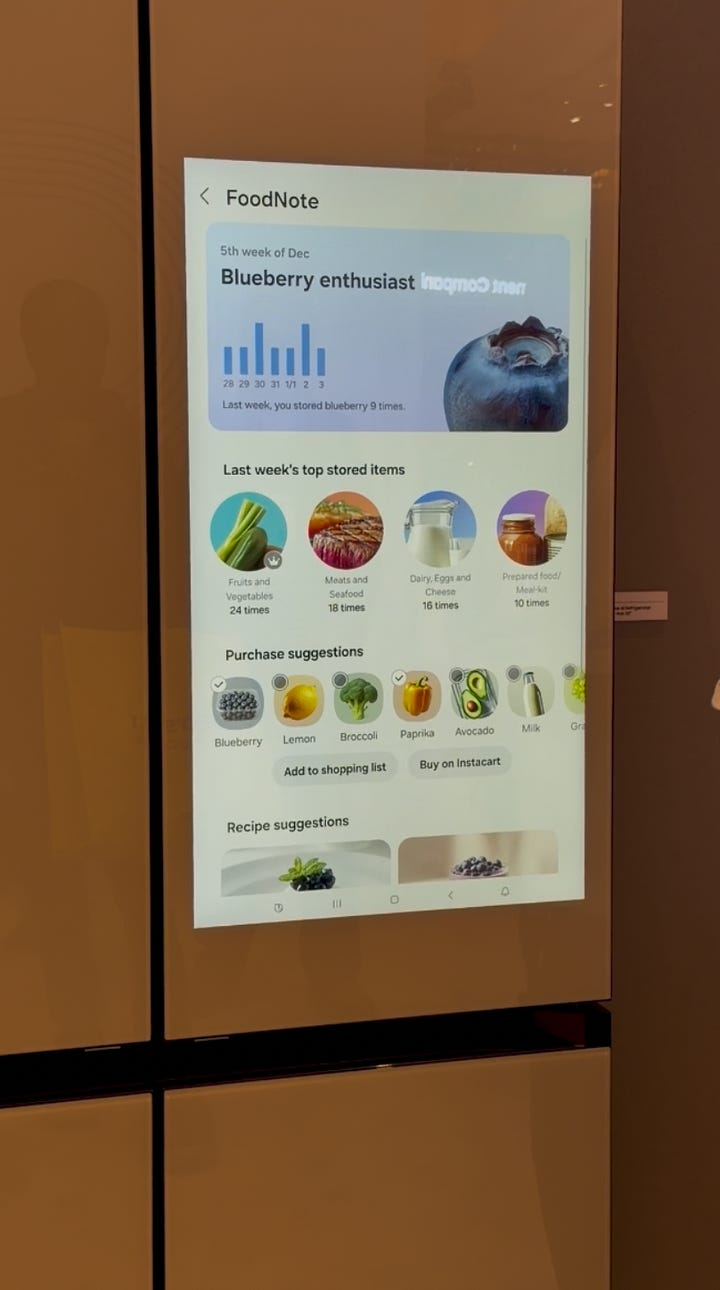

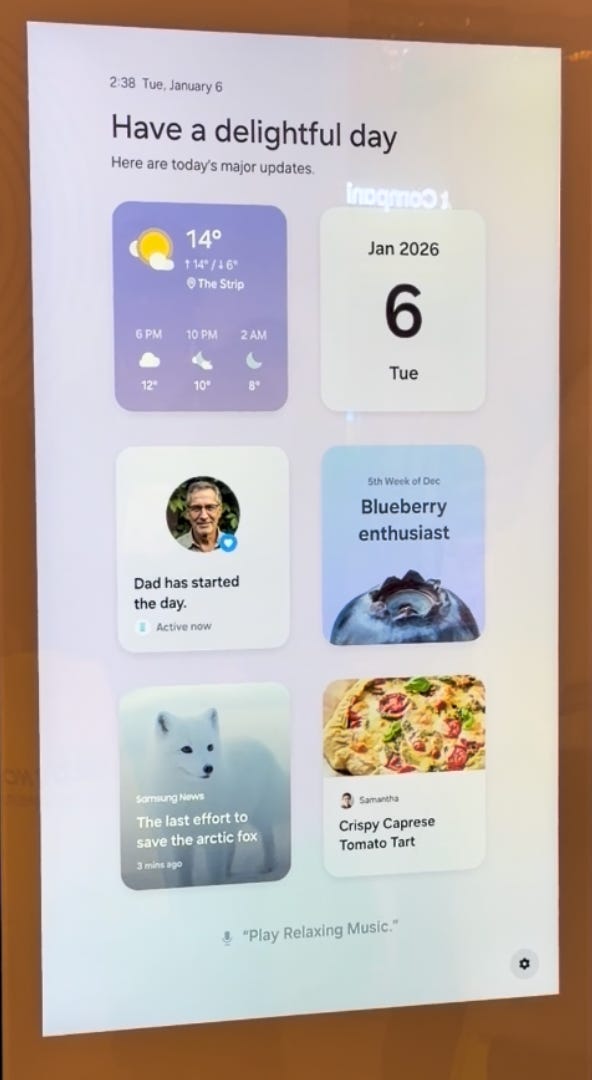

Samsung showed AI embedded in appliances you already have in your house, making them more useful without requiring you to learn new interfaces or change your habits.

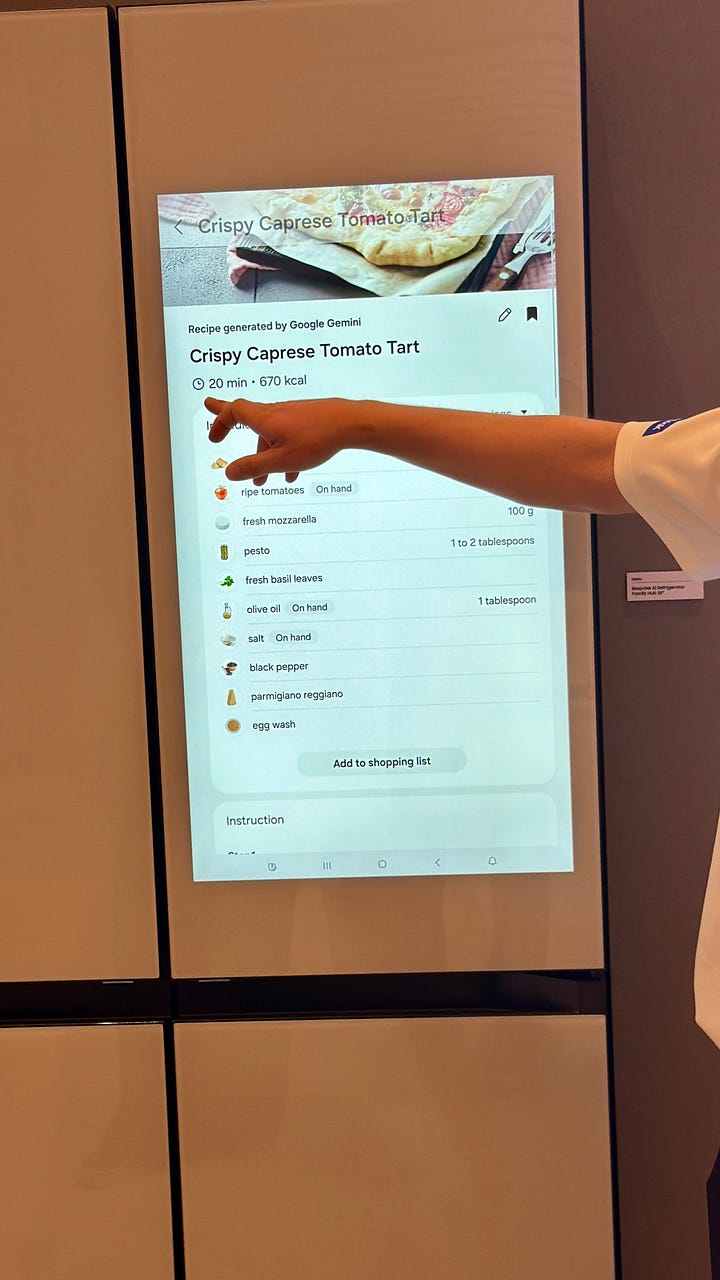

Everyone has stared into their fridge wondering what to eat. Samsung finally answered the question: “What’s for dinner tonight?”

The AI Family Hub Fridge has a camera inside that captures everything you add or remove. It doesn’t just scan barcodes; it sees:

Handwritten labels on leftovers.

Product packaging from any brand.

Unlabeled food (it correctly identified a bowl of blueberries on the shelf).

It tracks what you actually eat, suggests recipes based on inventory, and connects to Instacart when you’re low.

Having your fridge suggest options based on what’s actually in there and what you actually like to eat is the kind of useful that you notice daily. The AI isn’t doing anything flashy; it’s just solving a small problem that happens constantly.

Available now.

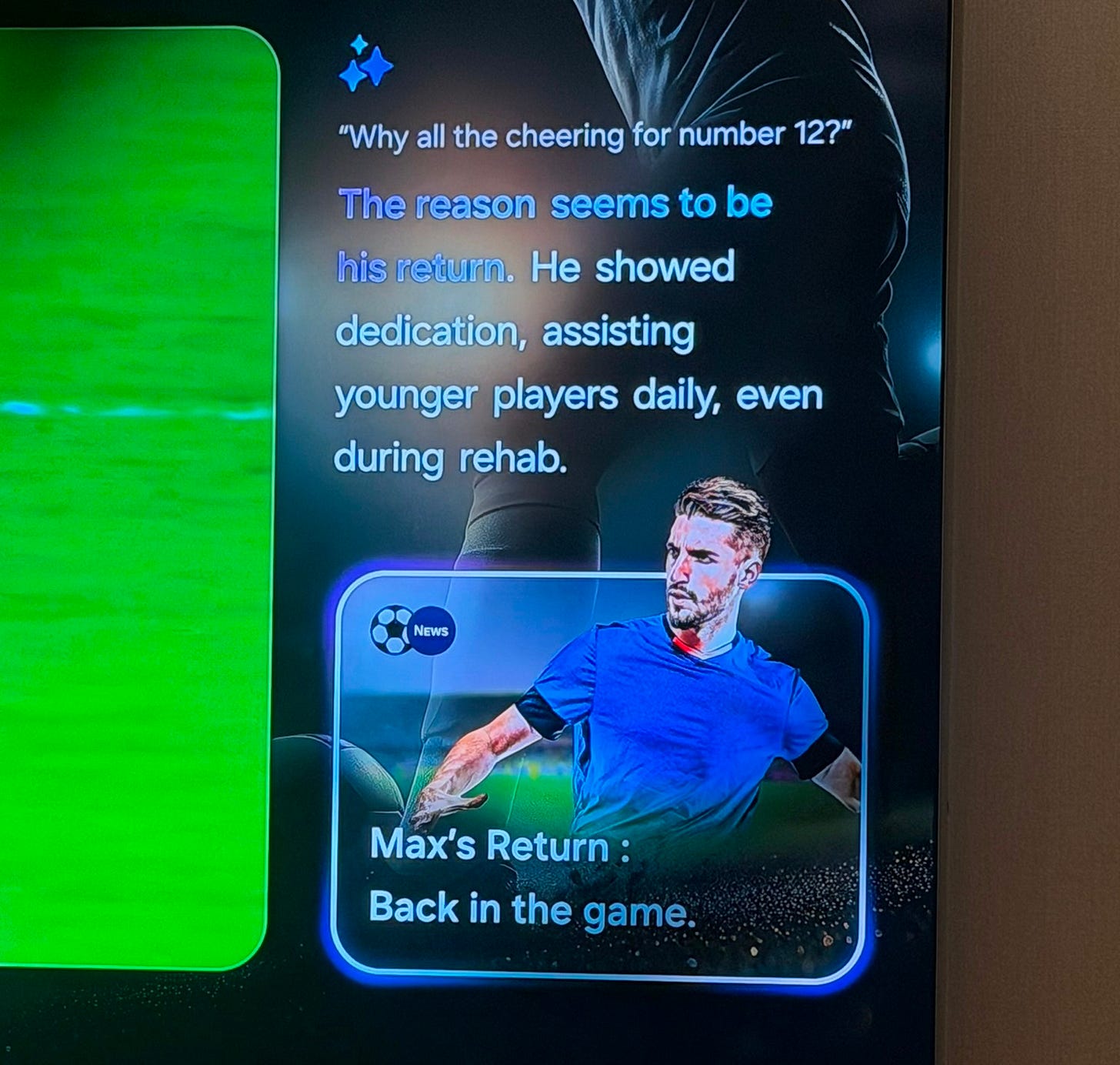

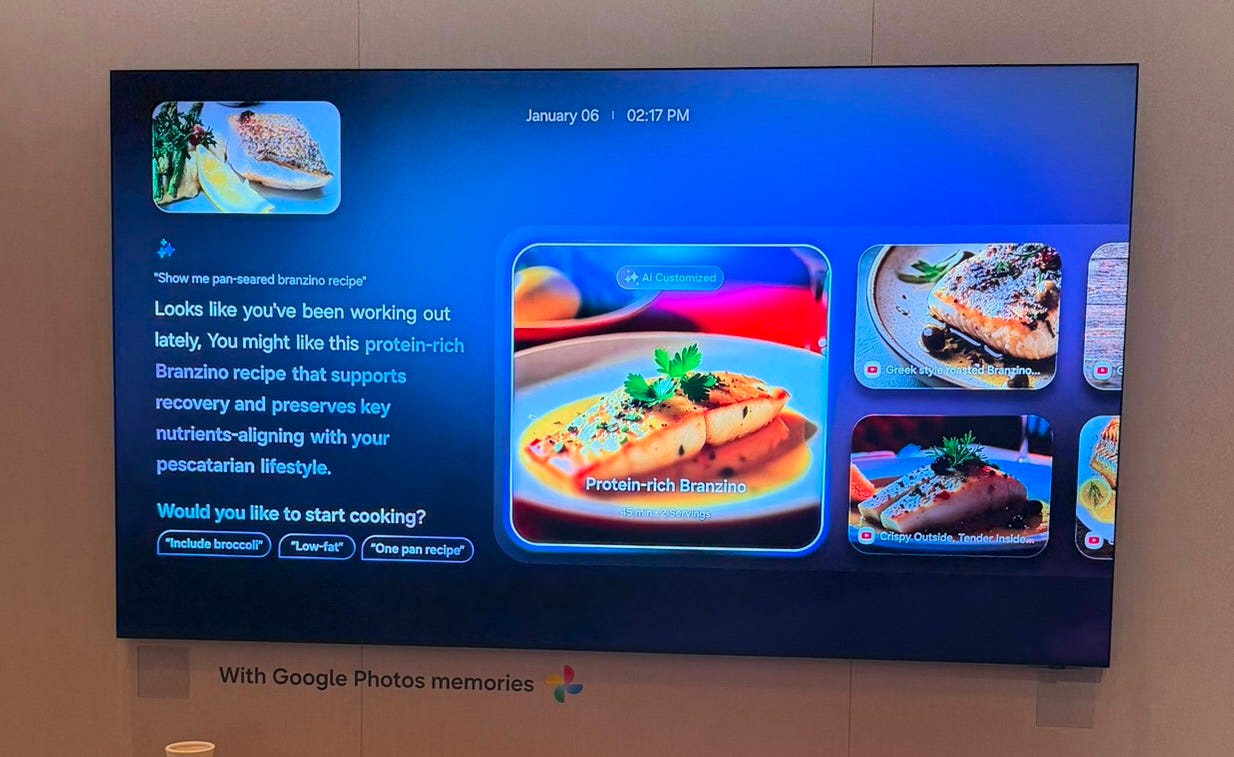

Samsung’s home technology this year focused on AI that works alongside what you’re already doing, rather than demanding your attention.

Their TV lineup includes Vision AI, which lets you ask questions while you’re watching without interrupting what’s on screen. During a sports game, you can ask “why are they cheering?” and get context delivered alongside the broadcast. The game keeps playing. You don’t have to pause, switch apps, or pull out your phone. The AI response appears in a panel while your content continues.

You can also use it for queries unrelated to what you’re watching. Need to figure out dinner while the game is on? Ask the TV. It’s a voice assistant built into the display, but the implementation matters: it doesn’t hijack your screen.

The interaction feels natural because it doesn’t interrupt what you’re doing; it just adds information when you ask for it.

Huge for accessibility, and available now.

And Samsung’s AI Mirror scans skin and recommends a personalized skincare routine. It suggests makeup colors that complement specific skin tones. It detected skipped sunscreen and called it out directly. Rude, but fair.

The mirror pays attention to patterns you might miss and surfaces information that’s relevant to you specifically rather than generic advice.

Code AI Hardware Without an Engineering Team

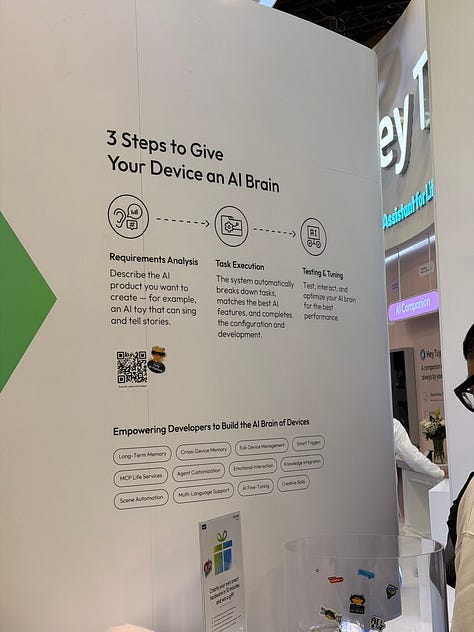

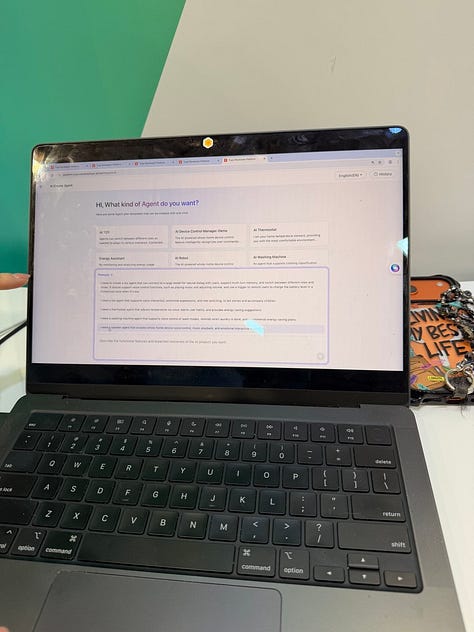

Tuya demonstrated how coding devices is becoming conversational. Configuring smart home devices used to require navigating apps, digging through settings menus, building IFTTT recipes, or writing actual code to get custom behavior.

I built an AI-powered home lighting system manager from a single prompt. Tuya’s Smart Home Platform is like vibe coding, but for physical devices. At the Tuya demo, I described what I wanted (“I want to manage lighting at home”), and the platform wrote a prompt and installed it on a physical device. After that, I said “dim the lights,” and the device handled it.

This is a meaningful shift in who gets to customize their environment. Smart home technology has been available for years, but actually getting it to do useful things required either technical skills or accepting whatever defaults the manufacturer programmed.

The promise was personalized automation; the reality was usually generic routines that didn’t quite fit how you actually live. Describing what you want in plain language and having the system configure itself to match closes the gap between what smart home technology promised and what it actually delivered.

The thread connecting all of these appliance announcements: AI that understands context and adapts to you rather than requiring you to adapt to it. The TV understands you’re watching a game and responds accordingly. The fridge understands your eating patterns and makes relevant suggestions. The mirror understands your skin and gives you specific advice.

The smart home understands what you’re trying to accomplish and configures itself to help. It’s automation aligned with how you live, not the other way around.

AI You Wear

David Kyazze and I also got the chance to test out Rokid AR glasses on the floor, and they’re worth mentioning separately from the home tech.

The standout feature is real-time translation. I had a conversation with someone who spoke Mandarin while I spoke English. The translation appeared on the lenses as we talked. No pulling out a phone, no waiting for an app to process. Just continuous conversation across a language barrier.

For creators, these work like an invisible teleprompter. Load your script, deliver on camera, and no one watching can tell you’re reading. The screen is only visible to you.

There’s also a built-in AI assistant that provides visual context. Ask “what am I looking at?” and it tells you. That’s useful in unfamiliar environments, and it can be especially helpful for people who benefit from additional visual context when navigating new spaces.

Massive for creators. Massive for accessibility. Massive for anyone who travels internationally and doesn’t want language to be a barrier.

What This Means

The robots are here. They’re helpful. And they’re just getting started.

CES has always been about showing what technology could do someday. This year it showed what technology does right now: in your home, in your car, on your laptop, without asking permission or waiting for a connection.

Here’s what that looks like today:

video generation without cloud uploads

private, on-device recognition

robots that learn your schedule

appliances that understand your habits

devices that configure themselves from natural language

The infrastructure finally caught up. Local AI. Hybrid systems. Open models you control.

AI in physical space requires perception, reasoning, coordination, and judgment. These aren’t chatbots with arms. They’re systems that understand their environment and act.
