Adobe MAX 2025: ChatGPT + Adobe Express, Premiere Mobile, and Why Multi-Model AI Actually Matters

Chat with your design tools, edit video on your iPhone, and see why Adobe is choosing multiple AI models instead of one

The future of design isn’t about new buttons and complicated apps; it’s about conversation. Adobe MAX 2025 made that crystal clear.

This week, we saw Adobe take a big leap: making the interface optional. You’re no longer translating intent into clicks; you’re just describing what you need. And you’re doing more of it right from your phone. I tested some of this firsthand at MAX: studio-quality audio from a video I recorded at Adobe’s Creative Park, surrounded by ambient noise and chaos (thanks to Premiere on my iPhone), and professional advertising shots generated in Firefly from a photo of the item in your hand.

This is the shift happening across Adobe’s entire ecosystem: interfaces becoming optional, AI meeting you where you already work, and tools that understand what you’re trying to do.

Let’s break down what Adobe announced at MAX and what matters most: the tools you can use today, what’s coming soon to fundamentally change your workflow (like the ChatGPT integration), and the practical reason Adobe’s infrastructure decisions are such a big deal.

TL;DR: I tested Adobe’s new tools at MAX, recorded audio in chaos that came out studio-clean, and watched them preview AI that learns your content strategy. Here’s what actually shipped and what’s coming:

ChatGPT + Adobe Express - Design directly in ChatGPT (coming soon) or use the AI Assistant in Express today (live in beta)

Premiere Mobile - Professional editing on iPhone with AI audio cleanup that actually works (I tested it)

Firefly’s Multi-Model Approach - Choose between Google, OpenAI, Black Forest Labs, and Adobe’s models for different tasks

Layered Image Editing - AI that understands lighting and shadows, not just pixels

Project Moonlight - AI that learns what content works for your audience and suggests what to create next

Project Graph - Visual workflow builder to automate creative processes without coding

Meet Your New AI Design Team: ChatGPT + Adobe Express AI Assistant

This is the bigger shift: you no longer need to be an expert who remembers where every tool and button lives. Instead, the AI handles the execution, so you can step into the role of creative director and focus on the vision.

The ChatGPT integration for Adobe Express isn’t publicly available yet. Adobe previewed it at MAX but hasn’t given a release date beyond “coming soon.” When it launches, you’ll be able to generate, edit, and export designs directly within ChatGPT using natural language prompts. No app switching. No learning curve.

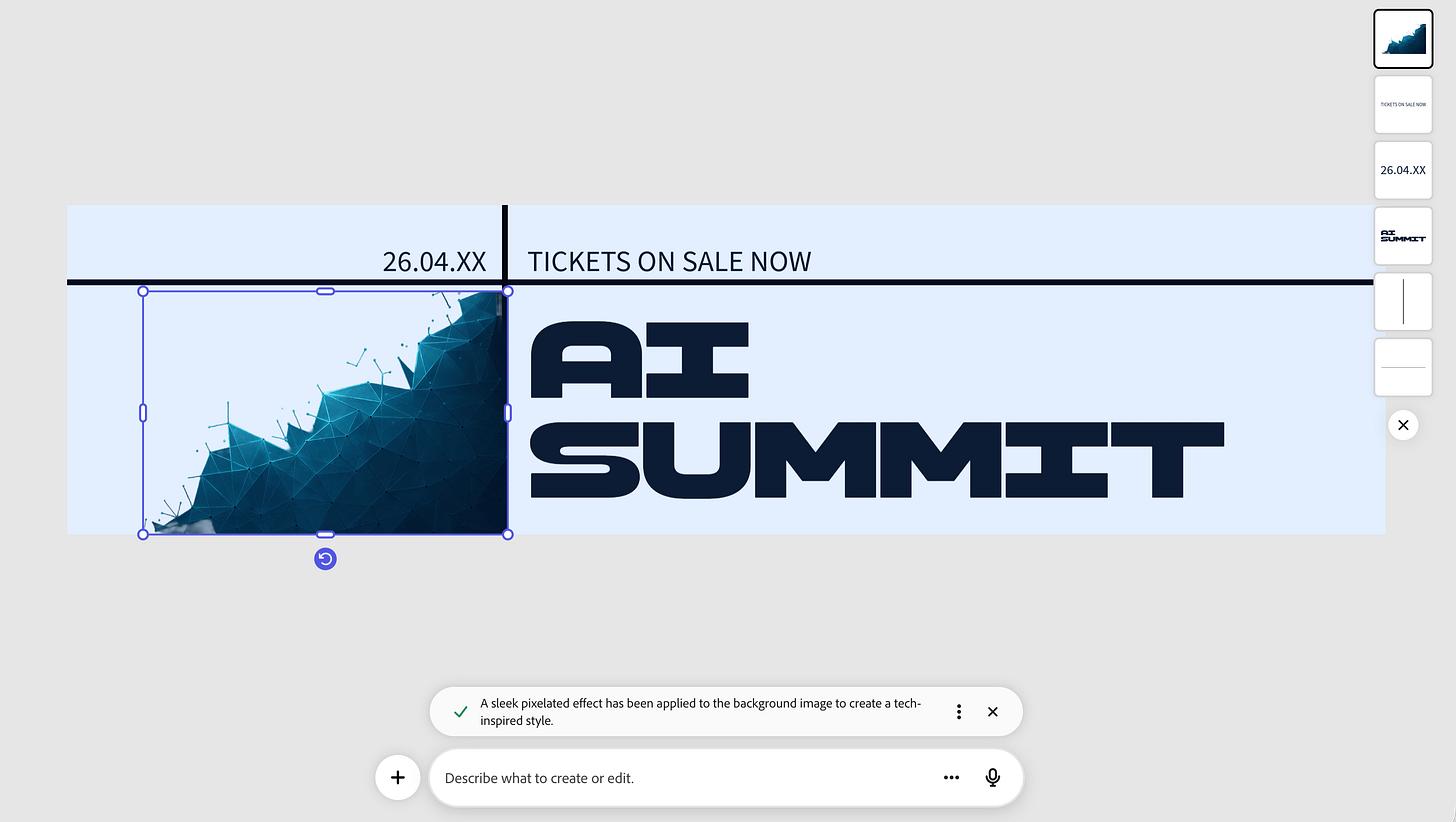

But while you’re waiting for that, the AI Assistant in Adobe Express itself is live in beta now. This is the same conversational approach—just inside Express instead of ChatGPT.

Here’s how it works. You open Express, click the AI Assistant, and describe what you need. “Create an Instagram post for my AI workshop.” The assistant generates design options. You refine through conversation: “Make the background darker.” “Change the font to something more modern.” “Add my logo in the top right corner.” When it’s right, you export.

The interface doesn’t disappear. All the traditional tools are still there if you want granular control. But for many tasks, you can skip the “where does this tool live” phase and just describe what you’re trying to accomplish.

This matters for two groups. First, non-designers who know what they want but get stuck on execution. The barrier isn’t creativity—it’s knowing which menu contains the thing they need. Conversational interfaces remove that friction.

Second, experienced designers who want to move faster. If you know exactly what adjustments you need, describing them in plain language is often quicker than navigating through panels and sliders. It’s not about replacing manual control—it’s about giving you another way to work when that’s more efficient.

The broader shift here is about where creative work happens. Adobe isn’t just improving their apps—they’re bringing their tools to the places you already are. ChatGPT integration is the clearest example. Most creators have ChatGPT open constantly. Instead of forcing you to switch to Adobe’s ecosystem, they’re meeting you in yours.

When the ChatGPT connector launches, the workflow becomes: stay in your chat window, describe what you need, refine the design through conversation, export directly. The creative tool adapts to your workflow instead of your workflow adapting to the tool.

But the AI Assistant available in Express today is the immediate version of this. Same principle, slightly different execution. And it’s ready to test right now.

Premiere Mobile: Professional Editing in Your Pocket

This is what AI should look like: solving real creator problems, not adding new ones. Mobile video recording is convenient, but audio quality is always the compromise. You either accept terrible audio or wait until you’re back at your desk with proper editing tools. Premiere mobile’s AI-powered voice enhancement removes that tradeoff.

I recorded a quick video at Adobe’s Creative Park during MAX, then tested the voice enhancement feature in Premiere mobile. Here’s the before and after - watch what happens when I hit voice enhancement:

This matters for creators working in the field, content creators producing daily social content, or anyone who needs to turn around quick edits without waiting for desktop access. The workflow compresses from “shoot, transfer to computer, open Premiere, edit, export” to “shoot, edit, publish” all from one device.

Also coming: the Create for YouTube Shorts integration. It will live inside the Premiere mobile app and also be accessible directly from YouTube. You’ll get quick-access templates, transitions, and effects built specifically for Shorts. The goal is to meet creators where they already are (on their phones, on YouTube) instead of forcing platform changes.

Note: Adobe Premiere is available for iPhone users now, in beta. Android support is in development.

The YouTube partnership reveals Adobe’s broader strategy. They’re not just building better tools. They’re integrating those tools into the workflows and platforms creators already use. Premiere mobile isn’t competing with mobile-first video apps. It’s bringing professional editing capabilities to the places where mobile video already lives.

Adobe Firefly: The Multi-Model Ecosystem

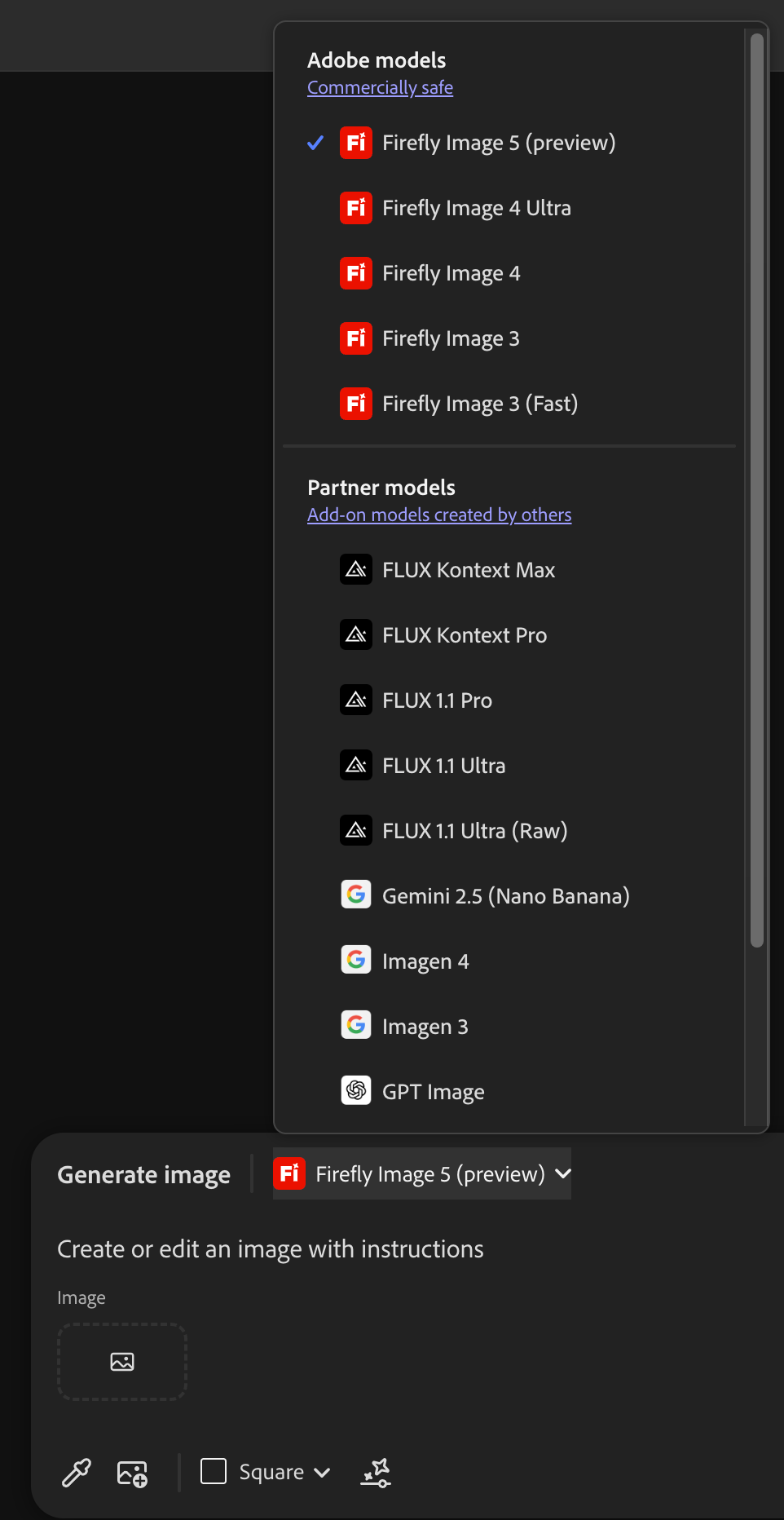

Adobe isn’t building one AI model anymore. They’re building a platform that orchestrates multiple AI models as a system.

Firefly now includes models from Google (Gemini 2.5 Flash), Black Forest Labs (FLUX.1 Kontext), OpenAI, Runway, ElevenLabs, Topaz Labs, and Adobe’s own Firefly models. You choose which model to use based on what you’re trying to accomplish.

This isn’t about having options for the sake of options. Different models excel at different things. Some are better at photorealism. Some are better at specific styles. Some handle text better. Some are faster. Firefly gives you access to all of them through one interface, so you pick the right tool for the specific task.

Firefly Image Model 5, Adobe’s latest, is now in public beta. It generates images at native 4MP resolution without upscaling. It also handles prompt-based editing while preserving the parts of the image you didn’t ask to change. Adobe calls this “pixel-perfect consistency”: you can make targeted edits without affecting the rest of the composition.

I tested this in a demo at MAX. We generated product images of a water bottle, and the results looked like professional advertising photography. Clean lighting, realistic reflections, sharp details. No obvious “this was AI-generated” artifacts. The kind of output you could use in actual client work without extensive cleanup. The most impressive part was actually the water bottle label and text - from a picture taken on an iPhone.

Firefly isn’t just image generation anymore. It’s become an all-in-one creative hub. Here’s what it includes now:

Firefly Boards is your collaborative ideation workspace. You can generate images, organize concepts, add notes, work with your team in real-time. The new Rotate Object feature lets you convert 2D images to 3D and reposition them. PDF export and bulk image downloads make it easier to move concepts from ideation to production.

Generate Soundtrack is in public beta. Upload a video, tell Firefly the mood and energy you want, and it composes an original music track synced to your video’s length. The audio is commercially safe—trained on licensed content—so you can use it in client work or monetized content without licensing issues.

Generate Speech is also in public beta. Text-to-speech with natural pacing and emphasis in over 20 languages. You can adjust pitch, pacing, emotion tags, and manually edit pronunciation for specific words. This uses Firefly’s Speech Model and ElevenLabs Multilingual v2.

The Firefly video editor is in private beta. It’s a web-based, timeline-based editor where you can generate clips, organize footage, trim and sequence, add voiceovers and soundtracks, and add titles—all in one place. The idea is end-to-end video production without switching between tools.

In Creative Cloud apps, Firefly integration shows up as multi-model selection. In Photoshop, Generative Fill now lets you choose which AI model runs your prompt. Want a specific aesthetic? Pick the model that’s best at that style. Want maximum realism? Choose the photorealistic model.

Generative Upscale in Photoshop uses Topaz Labs technology to upscale low-resolution images to 4K while preserving detail. This solves a common problem: you have an image that’s too low-res for your use case, but upscaling normally just makes it blurry at a larger size.

Firefly Creative Production is in private beta. This is for bulk editing workflows. If you need to edit hundreds or thousands of images at once (replacing backgrounds, applying consistent color grading, cropping to specific dimensions), you can do it through a no-code interface.

The multi-model approach is Adobe’s answer to the “which AI is best” debate. The answer isn’t one model. It’s the right model for each specific task, coordinated through infrastructure that makes switching between them seamless.

Every model in Firefly is either Adobe’s own (trained on licensed content) or integrated through official partnerships. This matters for professional and commercial use. If you’re creating assets for clients or monetized content, using models trained on licensed data reduces legal risk.

The practical difference: you’re not locked into one AI’s capabilities or aesthetic. You can use Google’s model for one task, Black Forest Labs for another, Adobe’s for a third - all in the same project, all through the same interface.
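If it helps to see “the right model for each task” as logic rather than a menu, here’s a minimal Python sketch of task-based routing. To be clear, this is not Adobe’s API. In Firefly you pick the model from the interface. The task labels, the `MODEL_FOR_TASK` table, and the `pick_model` and `plan_project` helpers are all hypothetical, and the task-to-model pairings are illustrative assumptions, not recommendations from Adobe or from my testing.

```python
# Hypothetical routing table. The task labels and pairings are
# illustrative assumptions, not Adobe's API or official guidance.
MODEL_FOR_TASK = {
    "photoreal_product_shot": "Firefly Image Model 5",
    "targeted_edit": "FLUX.1 Kontext",
    "quick_concept": "Gemini 2.5 Flash",
}

# Used when a task has no explicit entry in the table.
DEFAULT_MODEL = "Firefly Image Model 5"


def pick_model(task: str) -> str:
    """Return the model assigned to a task, falling back to the default."""
    return MODEL_FOR_TASK.get(task, DEFAULT_MODEL)


def plan_project(tasks: list[str]) -> list[tuple[str, str]]:
    """Pair every task in one project with the model that would run it."""
    return [(task, pick_model(task)) for task in tasks]
```

The point of the sketch is the shape of the decision: one project, several tasks, and a different model per task, with a sensible default when you have no preference.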

This is what Adobe means when they talk about AI as infrastructure. It’s not one feature. It’s the layer that connects multiple AI capabilities into workflows that adapt to what you’re trying to accomplish.

Layered Image Editing: Understanding Scenes, Not Just Pixels

Layered Image Editing means you can select individual elements in an image and move, replace, or modify them while the AI automatically adjusts shadows, reflections, and lighting to maintain realism.

Firefly Image Model 5 treats images differently than previous AI models. Instead of seeing a flat grid of pixels, it understands images as scenes with objects, lighting, and spatial relationships.

Here’s what that looks like in practice. You generate or upload an image. You select an object - say, a lamp. You move it to a different part of the scene. The AI understands that the lamp was casting light on the wall and creating a reflection in a nearby window. When you move the lamp, the lighting effects move with it. The shadow adjusts to the new position. The reflection updates in the window.

Or you remove the lamp entirely. Standard content-aware fill would patch over where the lamp was standing. Layered editing removes the lamp, the warm glow it was creating on surrounding surfaces, and the reflection in the glass. It’s analyzing the scene’s lighting logic and removing the cascading effects of that object, not just the object itself.

This brings 3D scene thinking to 2D image editing. The AI is making assumptions about depth, light sources, material properties, and physical relationships between objects. Then it maintains those relationships when you make edits.

Photoshop also added Harmonize, which is AI-assisted compositing. When you add a new element to an image, Harmonize analyzes the background and automatically adjusts the color, lighting, and shadows of the new object to match the scene. This evolved from a tech preview called “Project Perfect Blend” that was shown earlier this year.

The difference this makes: less cleanup work after AI generation or compositing. Edits that used to require manual adjustment of lighting, shadows, and color matching now happen automatically because the AI understands what should happen physically in the scene.

The limitation is that it’s still making educated guesses about 3D space from 2D images. It won’t be perfect every time. But it’s closer to “understand the scene and maintain its internal logic” than “fill pixels and hope it looks right.”

For creators doing product photography, marketing assets, social content, or any visual work that involves compositing or scene manipulation, this reduces the technical barrier. The focus shifts from “how do I make this shadow look realistic” to “what do I want this scene to look like.”

Project Moonlight: AI That Learns Your Strategy

Project Moonlight is in private beta. It’s not available to test yet. But it’s the most strategically important announcement from MAX for content creators.

Project Moonlight coordinates AI assistants across Adobe’s apps. It analyzes your Creative Cloud libraries: your past projects, your assets, your templates. It also connects to your social media channels and analyzes your content history, your metrics, what performs well with your audience.

Then it uses that information to help you brainstorm and create new content. Not generic suggestions. Suggestions based on what has actually worked for you before.

The difference between current AI and Moonlight: current AI says “Here’s a generic Instagram post.” Moonlight says “Your audience responds well to carousel posts with data visualizations posted on Tuesday afternoons. Your highest-performing posts used your teal and orange color scheme. Want to create another one?”

It’s learning your creative patterns and your audience patterns, then helping you make strategic decisions about what to create next—not just how to create it.

For creators managing content pipelines across multiple platforms, this is the difference between AI as a production tool and AI as a strategic assistant. Production tools help you make things faster. Strategic tools help you decide what to make.

Moonlight isn’t replacing your creative judgment. It’s giving you data-informed suggestions based on your own past performance. You still make the decisions. But you’re making them with better information about what’s likely to resonate with your specific audience.

This also addresses one of the biggest challenges in content creation: consistency. Maintaining a consistent visual style, brand voice, and content strategy across hundreds of assets is difficult. Moonlight helps by learning what “your style” actually is, not from a brand guide you uploaded, but from the content you’ve actually created and published.

When Moonlight becomes publicly available, the workflow changes from “I need to post something today, what should I make” to “Based on what’s worked before, here are three content ideas that are likely to perform well. Want to develop one of them?”

That’s a different kind of AI assistance. It’s closer to having a creative director who knows your work and your audience than having a tool that generates images on demand.

Project Graph: Visual Workflow Orchestration

Project Graph is also in preview and not publicly available yet. It’s a visual workflow builder that connects Adobe products.

The strategic move here: Adobe is acknowledging that creative work isn’t just about individual apps anymore. It’s about workflows that span multiple tools. Graph gives you infrastructure to design, visualize, and automate those workflows.

Think of it like Make.com or n8n, but built natively into Creative Cloud. You drag and drop workflow steps, connect Adobe apps together, and build automated creative pipelines without writing code.

An example workflow: new image uploaded to Firefly Boards → auto-generate three variations → apply brand colors from your library → export to multiple formats → publish to designated social channels. All automated. Visually designed, like a flowchart you can edit.
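For readers who think in code, the workflow above has the shape of a simple pipeline: each step takes a list of assets and hands a new list to the next step. Here’s a rough Python sketch of that idea. Project Graph itself is visual and no-code, and nothing here is an Adobe API. The `Asset` class, the step functions, and `run_workflow` are hypothetical stand-ins that only record what would happen, and the publish step is left out because it would depend on your channels.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Asset:
    """A stand-in for an image moving through the workflow (hypothetical)."""
    name: str
    history: list[str] = field(default_factory=list)


# A step takes the current assets and returns the assets for the next step.
Step = Callable[[list[Asset]], list[Asset]]


def generate_variations(assets: list[Asset]) -> list[Asset]:
    """Placeholder: produce three variations of every incoming asset."""
    return [
        Asset(f"{a.name}-v{i}", a.history + ["variation"])
        for a in assets
        for i in range(1, 4)
    ]


def apply_brand_colors(assets: list[Asset]) -> list[Asset]:
    """Placeholder: record that brand colors were applied."""
    return [Asset(a.name, a.history + ["brand_colors"]) for a in assets]


def export_formats(assets: list[Asset]) -> list[Asset]:
    """Placeholder: emit one copy of each asset per output format."""
    return [
        Asset(f"{a.name}.{ext}", a.history + ["export"])
        for a in assets
        for ext in ("png", "jpg")
    ]


def run_workflow(upload: Asset, steps: list[Step]) -> list[Asset]:
    """Run the steps in order, feeding each step's output to the next."""
    assets = [upload]
    for step in steps:
        assets = step(assets)
    return assets


# The order of this list is the "flowchart": reorder or swap steps to edit it.
WORKFLOW: list[Step] = [generate_variations, apply_brand_colors, export_formats]
```

Calling `run_workflow(Asset("bottle"), WORKFLOW)` turns one upload into six exported files (three variations in two formats), each carrying a record of the steps it passed through. A visual builder gives you the same structure as boxes and arrows instead of a list.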

For creators producing content at scale, this transforms Adobe from “a collection of separate tools” into “a programmable creative system.” Repetitive workflows that currently require manually moving between apps and clicking through the same steps can become automated processes you design once and run repeatedly.

The visual aspect matters. Automation often requires technical knowledge like writing scripts, understanding APIs, debugging when things break. Visual workflow builders make that accessible to non-technical users. You can see the logic of your workflow, understand where it might break, and adjust steps without coding.

This also makes workflows shareable. If you build an effective automation for your content pipeline, you can export it and share it with your team or community. They can import it, adjust the specifics to their brand and channels, and run the same process.

When it launches, the question becomes: what repetitive creative processes are you doing manually that could be automated? What tasks are you doing the same way every time that could be built into a reusable workflow?

That’s a different way of thinking about creative tools. Not “which app do I need for this task” but “what system can I build to handle this entire process.”

What This Actually Means

Here’s the pattern across everything announced at MAX 2025:

Adobe is building infrastructure for AI-native creative work. Not AI features bolted onto existing tools. Infrastructure that changes how the tools themselves work.

Conversational interfaces like the ChatGPT integration and AI Assistants mean you can describe what you want instead of learning where tools live. The interface becomes optional, not required.

Mobile and distribution integration (Premiere on iPhone, the YouTube Shorts partnership) means creative tools meet you where you already work instead of forcing you to come to them.

Multi-model infrastructure (Firefly’s approach) means the right AI for specific tasks, coordinated through one platform. You’re not locked into one model’s capabilities or limitations.

Scene intelligence like layered editing and Harmonize means tools that understand context and physical properties, not just pixel manipulation. Edits that respect lighting logic and spatial relationships.

Strategic learning with Project Moonlight means AI that learns your creative patterns and audience response, then helps you make strategic decisions about what to create next.

Workflow orchestration with Project Graph means connecting separate tools into programmable systems. Automated creative pipelines you design visually without coding.

This isn’t about individual features. It’s about Adobe building the infrastructure for creative work where AI is background technology that works across models, across apps, across your entire process.

The water bottle images I generated in Firefly looked like professional product photography. The video I recorded in Creative Park at MAX sounded studio-quality after one button press in Premiere mobile. The Express AI Assistant created designs through conversation. These aren’t just impressive demos. They’re AI that integrates into actual production workflows.

The shift isn’t that Adobe added AI features. It’s that they’re building creative tools where the AI layer becomes infrastructure - invisible, reliable, adaptable to how you work.

Some of this is available now: the Express AI Assistant, Premiere mobile, and Firefly’s multi-model approach. Other pieces (the ChatGPT integration, Moonlight, Graph) are coming soon. But together they reveal the direction: AI that adapts to you, not features you adapt to.

Adobe MAX 2025 in Review

Yesterday’s Sneaks showed where Adobe Research is headed: experimental projects that might become features someday. Today’s announcements show where creative work itself is headed.

You can start using some of this immediately. Express AI Assistant is live in beta. Premiere mobile is available on iPhone. Firefly’s multi-model approach is ready to test. Generate Soundtrack and Generate Speech are in public beta. Layered editing and Harmonize are shipping now.

The tools are evolving faster than most people realize. But the principles stay the same: clear intent, specific prompts, systems thinking. The technology is catching up to how creators actually want to work.

The ChatGPT integration will drop eventually. Until then, the AI Assistant in Express is waiting. Premiere mobile is ready to test. Firefly’s models are ready to generate. The infrastructure is being built in real-time.

Adobe MAX 2025 wasn’t about one big AI announcement. It was about building creative tools that finally speak human.